CHAPTER 4 | Search Engines in an SEO Company

The Fundamentals of Search Engine Friendly Design & Development


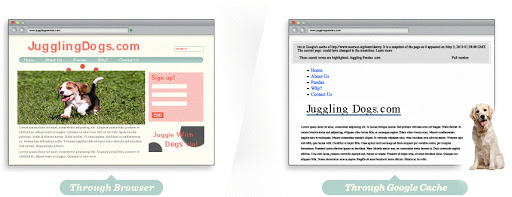

Search engines are limited in how they crawl the web and interpret content. A webpage doesn’t always look the same to you and me as it looks to a search engine. In this section, we’ll focus on specific technical aspects of building (or modifying) web pages so that they are structured for both search engines and human visitors. Share this part of the guide with your programmers, information architects, and designers, so that everyone involved in a site’s construction is on the same page.

Indexable Content

For web developers who want their pages to be visible to search engines, the most important content should be in HTML text format. Search engine crawlers often ignore or devalue images, Flash files, Java applets, and other non-text content, so placing your words in the HTML text is the easiest way to make sure that search engines, and by extension searchers, can see them. If you need richer formatting or visual styles, there are more advanced methods for making non-text content visible as well. Here are a few of them:

- Provide alt text for images. Give images in gif, jpg, or png format an alt attribute in the HTML so that search engines get a text description of the visual content.

- Supplement search boxes with navigation and crawlable links.

- Supplement Flash or Java plug-ins with text on the page.

- Provide a transcript for video and audio content if the words and phrases used should be indexed by search engines.
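The alt-text advice above is easy to audit automatically. Here is a minimal sketch using Python's standard `html.parser`; the `images_missing_alt` helper and the sample markup are hypothetical. It flags images whose alt attribute is missing or empty. Note that an empty alt is legitimate for purely decorative images, so treat the output as a list to review rather than a list of errors.

```python
from html.parser import HTMLParser


class AltTextAuditor(HTMLParser):
    """Collects the src of every <img> whose alt attribute is missing or empty."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # A missing alt is absent from attrs; a bare "alt" parses as None.
        if not (attributes.get("alt") or "").strip():
            self.missing_alt.append(attributes.get("src", "(no src)"))


def images_missing_alt(html: str) -> list:
    auditor = AltTextAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.missing_alt


page = """
<img src="/img/jumping-dog.jpg" alt="Border collie jumping over a hurdle">
<img src="/img/logo.png">
<img src="/img/banner.gif" alt="">
"""
print(images_missing_alt(page))  # ['/img/logo.png', '/img/banner.gif']
```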

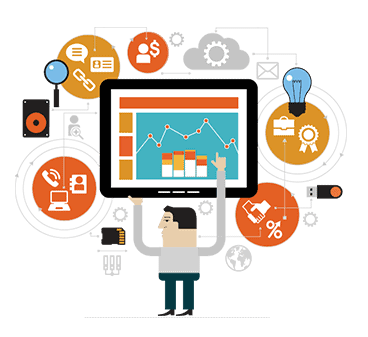

Seeing your site as the search engines do

One way to resolve an issue is to address it before it arises. In SEO, you as the web developer can do this by learning to think the way search engines do: how will Google look at my website? Keeping this question in mind is key to improving your site’s visibility. Tools such as SEO-browser.com help site owners identify which parts of their content are visible to search engines and which are missing. Google’s cache also shows how a page actually appears to the search engine, giving site builders a view of their pages as the crawler found them. Check Google’s text-only cache of this very page you are reading now to see the difference.

“I built a huge Flash site for jumping dogs, but I hardly get found. What’s going on?”

Whoa! That’s what it looks like?

As you can see, the Google cache shows that the jumpingdog.com homepage above is missing most of the information visitors see. Without that text, search engines cannot tell what the page is relevant to.

Hey, where did my content go?

Believe it or not, these are the same webpage. The attractive image and text on the left become an empty page on the right because none of the content is HTML text, so search engines cannot see it or add it to their index. What matters is not just what your text says but the format it is in: without HTML text, your content stays invisible. Another thing to beware of: you may start with a well-designed web page, only to find that the search engines’ view of the same page is a scattered, sparsely worded, unattractive mess.

Now that you know how useful the Google cache is, double-check your website to make sure all of your content is visible. This check should not be limited to text: visual content and links deserve attention too.
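To get a rough feel for the text-only view, you can strip a page down to its visible text yourself. The sketch below is a crude approximation, not how Google actually renders pages: it keeps text nodes and drops markup, scripts, and styles. The `text_view` helper and both sample pages are hypothetical, modelled on the jumping dog example.

```python
from html.parser import HTMLParser


class TextOnlyView(HTMLParser):
    """Keeps visible text nodes; drops tags, scripts, and styles."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.skip_depth = 0   # > 0 while inside <script> or <style>
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth and data.strip():
            self.chunks.append(data.strip())


def text_view(html: str) -> list:
    viewer = TextOnlyView()
    viewer.feed(html)
    viewer.close()
    return viewer.chunks


# All content lives inside the Flash movie, so almost no text survives.
flash_page = """<html><head><title>Jumping Dog</title></head>
<body><object data="jumpingdog.swf" type="application/x-shockwave-flash"></object></body></html>"""

# The same message written as HTML text is fully visible.
html_page = """<html><head><title>Jumping Dog</title>
<script>var trick = "roll over";</script></head>
<body><h1>Jumping Dog Training</h1>
<p>Agility classes for dogs of every size.</p></body></html>"""

print(text_view(flash_page))  # ['Jumping Dog']
print(text_view(html_page))
# ['Jumping Dog', 'Jumping Dog Training', 'Agility classes for dogs of every size.']
```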

Crawlable Link Structures

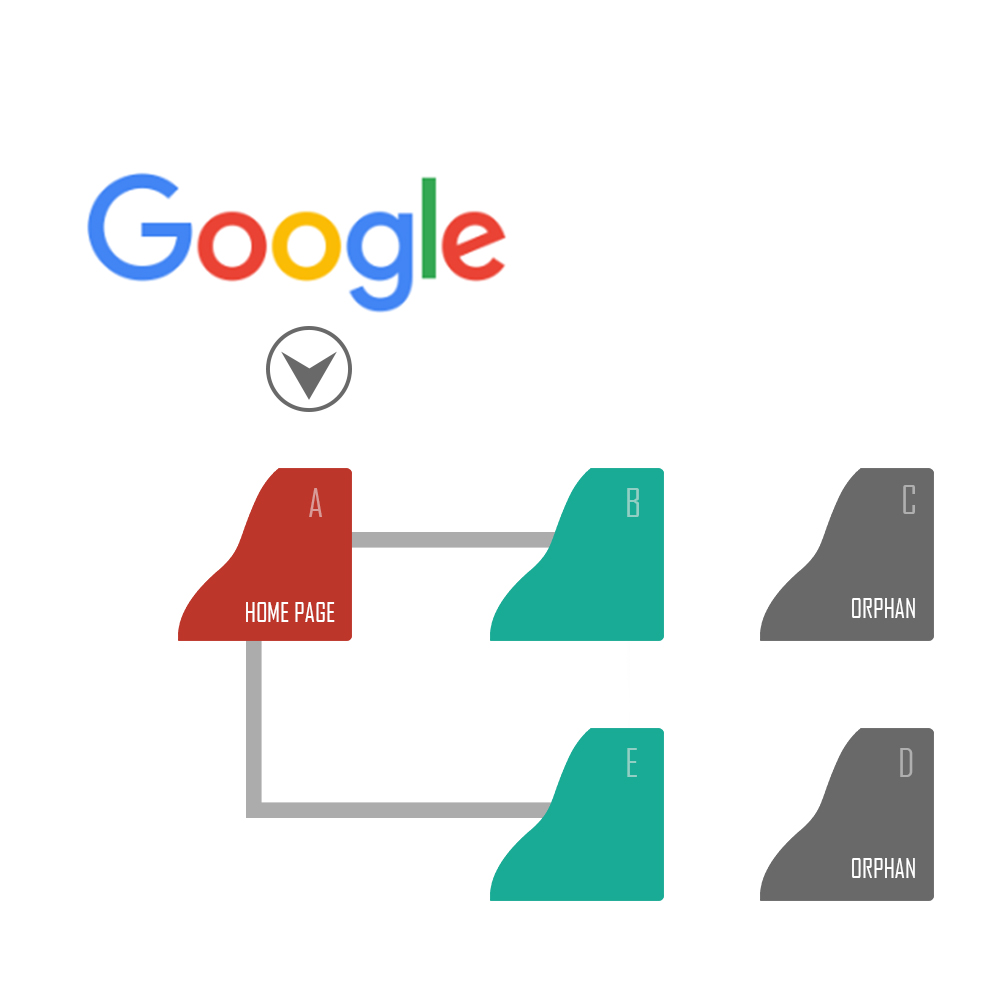

The visibility of your pages’ content is not the only factor in your website’s visibility as a whole. Search engines also need to be able to see your link structure, because links are how they find your pages in the first place, so get it right before you even think about your content. A crawlable link structure lets crawlers browse the pathways of a website and discover all of its pages.

Unfortunately, many websites structure their navigation in ways that search engines cannot access. This is a serious misstep: pages that crawlers cannot reach cannot be included in the index. Web developers should therefore make an easily accessible link structure a priority, alongside well-built pages.

Here is an example of what could occur:

In the example above, Google’s crawler reaches page A and finds the links to pages B and E. Since no links point to pages C and D, Google has no idea that they exist. However much effort has gone into those pages, it has no impact on search because Google cannot find them.
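The discovery process in this example can be sketched as a breadth-first crawl: a page is found only if an already-crawled page links to it. The link graph below is a hypothetical version of the five-page example (the links between C and D, and back from B and E, are assumptions); it is an illustration, not a model of Google’s actual crawler.

```python
from collections import deque

# Hypothetical site: A links to B and E; C and D link only to each other.
links = {
    "A": ["B", "E"],
    "B": ["A"],
    "C": ["D"],
    "D": ["C"],
    "E": ["A", "B"],
}


def crawl(start: str, link_graph: dict) -> set:
    """Breadth-first crawl from start, following only links found on crawled pages."""
    discovered = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in discovered:
                discovered.add(target)
                queue.append(target)
    return discovered


found = crawl("A", links)
orphans = set(links) - found
print(sorted(found))    # ['A', 'B', 'E']
print(sorted(orphans))  # ['C', 'D']
```

Adding a single link from A, B, or E to C would make both C and D discoverable, since D is reachable from C.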

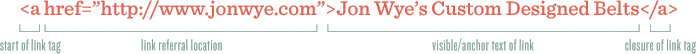

On any web page, there are plenty of things to click on: images, buttons, portions of text (usually highlighted in a different color to show that they are links), and so on. These links, also known as link tags or hyperlinks, are how visitors and search engines navigate a website. A hyperlink has four parts:

- Start of the link tag. This opens the link; a link tag can wrap text, images, or other objects, all of which give the user a clickable area.

- Link referral location (the href attribute). This is the address of the page the link points to.

- Visible/anchor text of the link. This is the clickable text visitors see on the page; search engines also read it as a description of the target page.

- End of the link tag. This closes the link.
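The four parts map directly onto what an HTML parser sees. This minimal sketch uses Python’s standard `html.parser` to pull out the referral location and anchor text of each link; the `extract_links` helper and the sample markup are hypothetical, and nested inline tags inside a link are simply flattened into its anchor text.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Records (href, anchor text) for every <a href="..."> element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # referral location of the link currently open
        self._text = []     # anchor text collected so far

    def handle_starttag(self, tag, attrs):
        if tag == "a":                                # 1. start of the link tag
            self._href = dict(attrs).get("href")      # 2. referral location
            self._text = []

    def handle_data(self, data):
        if self._href is not None:                    # 3. visible/anchor text
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:     # 4. end of the link tag
            anchor = " ".join("".join(self._text).split())
            self.links.append((self._href, anchor))
            self._href = None


def extract_links(html: str) -> list:
    extractor = LinkExtractor()
    extractor.feed(html)
    extractor.close()
    return extractor.links


html = ('<p>Read our <a href="https://mycitysocial.com/blog">SEO <b>blog</b></a> '
        'or see <a href="/services/">our services</a>.</p>')
print(extract_links(html))
# [('https://mycitysocial.com/blog', 'SEO blog'), ('/services/', 'our services')]
```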

Submission-Required Forms

Some websites require you to fill out a form, such as a login or a survey, before you can access their content. These pages are problematic for two reasons. Many users would rather not fill out forms and will simply leave. And search engine crawlers generally do not submit forms, so the protected pages are likely to stay invisible to search engines.

Links in JavaScript

If you use JavaScript for links, you may run into problems: search engines may give links embedded in JavaScript very little weight, or may not crawl them at all. If you want a page’s links to be crawled, use standard HTML links, either instead of JavaScript or alongside it.

Links and Meta Robots

Both the Meta Robots tag and the robots.txt file let site owners restrict crawler access to a page. Be careful, though: many site owners have used these directives to block rogue bots, only to discover that they had also shut out the search engines.
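Python’s standard `urllib.robotparser` makes this pitfall easy to see. In the sketch below, "BadBot" is a hypothetical rogue crawler and the `allowed` helper is illustrative. A rule written for every user agent (`*`) blocks Googlebot along with the bot you meant to stop, while a rule scoped to the bot’s own user-agent name leaves other crawlers alone.

```python
from urllib.robotparser import RobotFileParser


def allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Check a URL against robots.txt rules given as text (nothing is fetched)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Meant to stop one rogue bot, but "*" matches every crawler.
too_broad = """
User-agent: *
Disallow: /
"""

# Scoped to the hypothetical rogue bot only.
targeted = """
User-agent: BadBot
Disallow: /
"""

url = "https://mycitysocial.com/blog"
print(allowed(too_broad, "Googlebot", url))  # False
print(allowed(targeted, "Googlebot", url))   # True
print(allowed(targeted, "BadBot", url))      # False
```

Keep in mind that robots.txt is voluntary: a genuinely malicious bot can simply ignore it.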

Robots don’t use search forms

This relates directly to the warning about forms above, but it is common enough to mention on its own. Putting a search box on your site does not mean search engines will find everything your visitors can search for. Crawlers don’t perform searches, so content reachable only through a search box may remain unknown to search engines until a crawlable page links to it.

Frames or iFrames

Links in frames and iframes are technically crawlable, but they create structural problems for search engines in how pages are organized and followed. Unless you understand well how search engines index and follow links in frames, it is best to avoid them.

Links in Flash, Java, and Other Plug-Ins

Links embedded in Flash, Java applets, and other plug-ins are usually inaccessible to search engines. The jumping dog site above is an example: no matter how much information such pages contain, search engines cannot reach it.

Too Many Links

Search engines crawl only a limited number of links on any given page. When a page is swarmed by hundreds or even thousands of links, some of them may not be crawled or indexed, so keep the number of links on each page reasonable.


The rel="nofollow" attribute is used like this:

```html
<a href="https://moz.com" rel="nofollow">Lousy Punks!</a>
```

Links can carry many additional attributes. Search engines ignore nearly all of them, with the important exception of rel="nofollow". This attribute tells search engines that the link should not be interpreted as an endorsement of the page it points to.

Search engines interpret nofollow in slightly different ways, but in almost all cases a nofollowed link carries less weight than a normal one.
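To see which of a page’s links are nofollowed, you can check each link’s rel attribute. In HTML, rel holds a space-separated list of tokens, so the sketch below splits it and compares case-insensitively instead of testing for an exact string. The `audit_links` helper and the sample links (other than the moz.com one above) are hypothetical.

```python
from html.parser import HTMLParser


class NofollowAuditor(HTMLParser):
    """Sorts the href of each <a> element into followed or nofollowed."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollowed = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if href is None:
            return
        # rel is a space-separated list of tokens, matched case-insensitively.
        rel_tokens = (attributes.get("rel") or "").lower().split()
        target = self.nofollowed if "nofollow" in rel_tokens else self.followed
        target.append(href)


def audit_links(html: str):
    auditor = NofollowAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.followed, auditor.nofollowed


page = """
<a href="https://moz.com" rel="nofollow">Lousy Punks!</a>
<a href="https://mycitysocial.com/blog">Our blog</a>
<a href="https://example.com/partner" rel="noopener NOFOLLOW">Partner</a>
"""
followed, nofollowed = audit_links(page)
print(followed)    # ['https://mycitysocial.com/blog']
print(nofollowed)  # ['https://moz.com', 'https://example.com/partner']
```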

Are nofollow links bad?

Not so much. Although nofollow links carry less weight than followed links, they are a normal part of many link profiles. A site with many inbound links will usually have many inbound nofollow links too, and that is not a disadvantage. In fact, Moz’s Ranking Factors study reported that high-ranking sites had a higher percentage of inbound nofollow links than lower-ranking sites.

Google states that in most cases it does not follow nofollow links, which means they pass no PageRank or anchor text; nofollowing a link essentially removes it from Google’s link graph. Even so, many web developers believe that a nofollow link from a respected site can still be read as a sign of trust.

Bing & Yahoo!

Bing, which powers Yahoo! search results, also states that it does not include nofollow links in its link graph, though its crawlers may still use them to discover new pages.

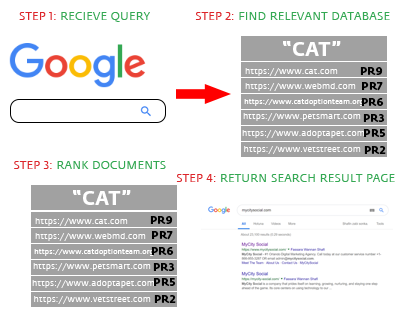

Keyword Usage and Targeting

Keywords are fundamental to search: the whole science of information retrieval, including web search engines like Google, is built on them. Search engines do not answer a query by reading every word of every page, which would be far too slow. Instead, as they crawl and index pages, they file those pages in keyword-based indices: many smaller databases, each centered on a particular keyword term or phrase. This lets an engine retrieve matching pages in a fraction of a second. For example:

For a page to have a chance of ranking in search results for ‘cat’, the word ‘cat’ has to be part of the page’s indexable content.
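A keyword-based index can be sketched as an inverted index: a map from each word to the set of documents containing it. This toy version is a simplification under stated assumptions (no stemming, no ranking, exact lowercase word matches), and the page ids and texts are hypothetical; real search engine indices are vastly more sophisticated.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list:
    """Lowercase alphanumeric words; no stemming, so 'dogs' != 'dog'."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(documents: dict) -> dict:
    """Map each word to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for word in tokenize(text):
            index[word].add(doc_id)
    return index


def search(index: dict, query: str) -> set:
    """Return ids of documents containing every word of the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


docs = {
    "page1": "How to groom a long-haired cat",
    "page2": "Cat food reviews and cat toys",
    "page3": "Training tips for jumping dogs",
}
index = build_index(docs)
print(sorted(search(index, "cat")))       # ['page1', 'page2']
print(sorted(search(index, "cat toys")))  # ['page2']
```

Only pages whose text contains "cat" are ever candidates for that query, and adding a second, more specific word narrows the set further.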

Keyword Domination

We use keywords to tell search engines what we are looking for. The keywords in a query determine which pages the engines retrieve, and the spelling, order, and punctuation of those words help them find matching pages. How keywords are used on a page also helps search engines decide how relevant the page is to a search. To improve ranking, use the keywords you want to rank for in the title, metadata, and body text. Be specific: the more precise your keywords, the fewer pages compete for them, and the better your chances of ranking.

Keyword Abuse

In an attempt to rank for specific keywords, site owners may be tempted to stuff far more keywords into their pages than the content needs. They should not: keyword stuffing does almost as much damage as using the wrong keywords, because it makes pages harder to read and can mark them as spam. Keyword abuse refers to overloading keywords into URLs, links, meta tags, and text. Using keywords well demands expertise and strategy. It’s better to attract more of your actual target audience with fewer keywords than to draw more visitors overall who want nothing to do with you because your keyword bank was too large.

On-Page Optimization

As discussed, whether people find your website depends on how you use keywords. So how should you use them? At MyCity Social, we do a lot of testing to see how search results shift in response to different keyword usage tactics.

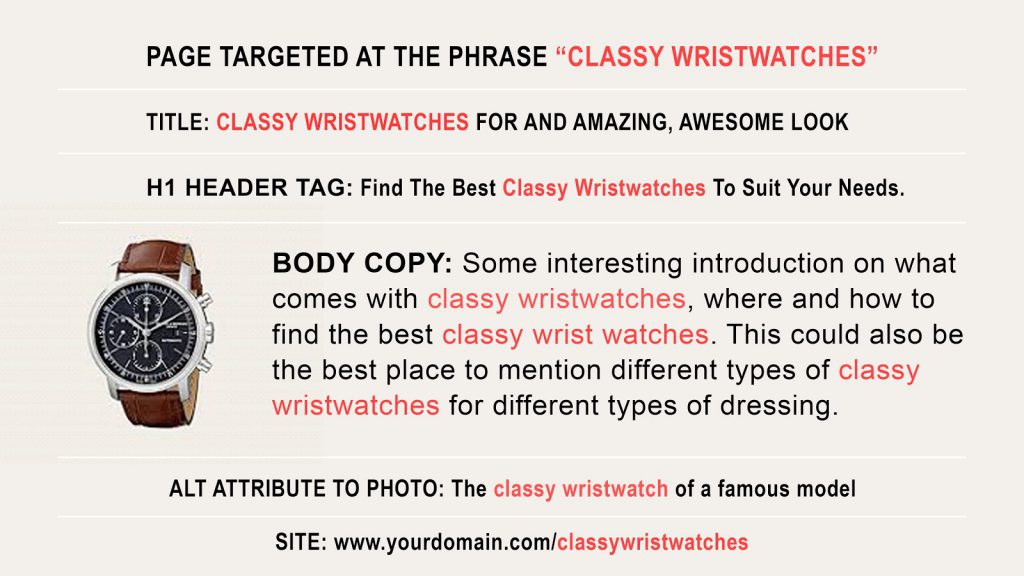

While working on the content of your web page, we advise that you use the keyword phrase in the following places:

- The title tag should contain the keyword at least once, as close to its beginning as possible.

- The keyword should appear once, prominently, near the top of the page.

- The keyword phrase should appear two or three times in the body text, including variations, and a few more times if the page has a lot of text.

- The alt attribute of at least one image on the page should include the keyword.

- The keyword phrase should be used once in the URL.

- The keyword should appear in the meta description tag at least once.
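The placement checklist above can be turned into a quick self-audit. The sketch below is hypothetical throughout: the `Page` record is a simplified stand-in for a parsed page, and the thresholds are assumptions (the keyword must start within the first 10 characters of the title, "near the top" means the first 100 words, and the body must mention it at least twice). It only counts exact phrase matches, not variations.

```python
from dataclasses import dataclass, field


@dataclass
class Page:
    """Hypothetical, simplified page record; field names are illustrative."""
    title: str
    url: str
    meta_description: str
    body: str
    image_alts: list = field(default_factory=list)


def keyword_checklist(page: Page, keyword: str) -> dict:
    kw = keyword.lower()
    slug = "-".join(kw.split())                  # "classy wristwatches" -> "classy-wristwatches"
    title = page.title.lower()
    body = page.body.lower()
    top_of_page = " ".join(body.split()[:100])   # assumed meaning of "near the top"
    return {
        "title_near_start": 0 <= title.find(kw) <= 10,
        "near_top_of_page": kw in top_of_page,
        "body_at_least_twice": body.count(kw) >= 2,
        "in_an_image_alt": any(kw in alt.lower() for alt in page.image_alts),
        "in_url": slug in page.url.lower(),
        "in_meta_description": kw in page.meta_description.lower(),
    }


page = Page(
    title="Classy Wristwatches for Every Occasion | Example Store",
    url="https://example.com/classy-wristwatches/",
    meta_description="Shop classy wristwatches in steel, leather, and gold.",
    body="Classy wristwatches never go out of style. Browse our classy wristwatches below.",
    image_alts=["Two classy wristwatches on a velvet tray"],
)
print(keyword_checklist(page, "classy wristwatches"))
```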

Generally, avoid using the keyword as anchor text on links that point to other pages on your site. Doing so can cause keyword cannibalization, where your own pages compete with each other for the same term.

Keyword Density Myth

Contrary to popular belief, keyword density is not a reliable measure of how relevant a page is.

Let’s say that two different documents, b1 and b2, each contain 500 terms and each repeats one particular term 10 times (tf = 10). The keyword density of each is KD = 10/500 = 1/50, or 2%. Density alone cannot tell us which document is more relevant: a density analysis says nothing about how close the keywords are to each other, which terms co-occur, what the document is about, or where exactly the keywords appear in it.
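The calculation above can be checked with a short sketch. The two documents below are hypothetical stand-ins for b1 and b2: each has 500 terms with the single-word term "watch" repeated 10 times, but in b1 the term sits at the very start and in b2 at the very end. Their densities are identical, even though the position of the keyword, one of the things density ignores, is completely different.

```python
def keyword_density(text: str, term: str) -> float:
    """Occurrences of a single-word term divided by the total number of words."""
    words = text.lower().split()
    return words.count(term.lower()) / len(words)


def first_position(text: str, term: str) -> int:
    """Index of the first occurrence of the term, or -1 if it never appears."""
    words = text.lower().split()
    return words.index(term.lower()) if term.lower() in words else -1


filler = ["lorem"] * 490                  # stand-in for the other 490 terms
b1 = " ".join(["watch"] * 10 + filler)    # term clustered at the very start
b2 = " ".join(filler + ["watch"] * 10)    # term clustered at the very end

print(keyword_density(b1, "watch"), keyword_density(b2, "watch"))  # 0.02 0.02
print(first_position(b1, "watch"), first_position(b2, "watch"))    # 0 490
```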

The Conclusion:

How, then, should an optimized page look? Check out this example, which uses the phrase “classy wristwatches” an optimal number of times.

For any web page, the contents of the title tag appear at the top of the browser window or tab, and are often used as the title when the page is shared on social media.

Using keywords in the title tag means that search engines will bold those terms in the search results when a user searches for them, which makes your listing more visible.

Using keywords in the title tag also matters for ranking, because search engines give title tags significant weight.

Title Tags

There are two things every web page title needs to be: accurate and concise. Make sure it’s on topic, and gets to the point. This affects everything from how the search engine finds it to how the user experiences the page.

Here are some basic instructions for the best quality Title Tags:

Make it as brief as possible

We recommend keeping your title tag to 65 to 75 characters, because most search engines and social media sites do not display more than that, showing an ellipsis (…) to indicate that the rest has been cut off. However, if you are targeting several keywords, or an especially long keyword phrase, and fitting them into the title is essential for ranking and user experience, it may be worth going longer.

Start with the most important keywords

The closer your keywords are to the start of your title tag, the more they help your ranking in search results, and the more likely users are to notice your listing and click on it.

Always include your brand

Including your brand name is very important. We recommend ending your title tags with your brand name, or at least including it in your homepage’s title tag. The more familiar people become with your brand, the higher the click-through rate on your content.
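The length, keyword-first, and brand guidelines can be combined into one small helper. This is a sketch, not a rule any search engine publishes: `build_title` is hypothetical, the " - " and " | " separators are a style choice, and `max_len=70` is an assumed limit picked from the 65 to 75 character range above. When the full title is too long, it drops the secondary phrase before it drops the brand.

```python
def build_title(primary: str, secondary: str, brand: str, max_len: int = 70) -> str:
    """Compose a title tag with the most important keyword first and the brand last.

    max_len is an assumed display limit chosen from the 65-75 character range.
    """
    with_secondary = f"{primary} - {secondary} | {brand}"
    if secondary and len(with_secondary) <= max_len:
        return with_secondary
    keyword_and_brand = f"{primary} | {brand}"
    if len(keyword_and_brand) <= max_len:
        return keyword_and_brand      # drop the secondary phrase first
    return primary                    # last resort: keep only the keyword


print(build_title("Online Reviews", "A Beginner's Guide", "MyCity Social"))
# Online Reviews - A Beginner's Guide | MyCity Social
print(build_title("Reputation Management Services", "Protect Your Online Image", "MyCity Social"))
# Reputation Management Services | MyCity Social
```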

Make it clear, legible, and emotionally appealing

Your title tag must be simple, clear, direct and readable to everyone. It must also be catchy and pull your viewers in so that they keep reading your content.

Meta Tags

Meta tags were originally intended to provide information about a website’s content. Some of the most useful meta tags, and how they work, are described below:

Meta Robots

The Meta Robots tag can be used to control search engine crawler activity (for all the major engines) on a per-page level. It can influence how a search engine treats a page in the following ways:

- index/noindex tells the engines whether the page should be crawled and kept in the search engine’s index for retrieval. Search engines index pages by default; choosing “noindex” excludes the page from the index.

- follow/nofollow tells the engines whether the links on the page should be crawled. By default, search engines follow the links on every page; choosing “nofollow” tells them to disregard those links for discovery, ranking, or both.

Example: `<META NAME="ROBOTS" CONTENT="NOINDEX, NOFOLLOW">`

- noarchive tells the engines not to save a cached copy of the page. By default, search engines keep cached copies of indexed pages that users can view; “noarchive” opts the page out.

- nosnippet tells the engines not to display a descriptive block of text next to the page’s title and URL in the search results.

- noodp/noydir are specialized directives telling the engines not to take a descriptive snippet about a page from the Open Directory Project (DMOZ) or the Yahoo! Directory.

The X-Robots-Tag HTTP header directive achieves the same objectives as the Meta Robots tag, and works especially well for content in non-HTML files, such as images.
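Whether directives arrive in a meta tag’s content attribute or in an X-Robots-Tag header value, they are a comma-separated list with the defaults described above (index, follow, archive, snippet) applying unless a directive says otherwise. The sketch below reads such a list; both function names are hypothetical, and it ignores details such as header values prefixed with a specific crawler’s name.

```python
def parse_robots_directives(value: str) -> set:
    """Split a meta robots content value or X-Robots-Tag header value into
    lowercase directives, e.g. "NOINDEX, NOFOLLOW" -> {"noindex", "nofollow"}."""
    return {token.strip().lower() for token in value.split(",") if token.strip()}


def crawler_policy(directives: set) -> dict:
    """Apply the defaults: everything is allowed unless a 'no...' directive says otherwise."""
    return {
        "index": "noindex" not in directives,
        "follow": "nofollow" not in directives,
        "archive": "noarchive" not in directives,
        "snippet": "nosnippet" not in directives,
    }


meta_content = "NOINDEX, NOFOLLOW"   # from <META NAME="ROBOTS" CONTENT="NOINDEX, NOFOLLOW">
header_value = "noarchive"           # e.g. an X-Robots-Tag: noarchive header on an image
print(crawler_policy(parse_robots_directives(meta_content)))
# {'index': False, 'follow': False, 'archive': True, 'snippet': True}
print(crawler_policy(parse_robots_directives(header_value)))
# {'index': True, 'follow': True, 'archive': False, 'snippet': True}
```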

Meta Description

The meta description tag is a short description of a page’s content and the main source of the snippet usually displayed beneath the title in search results. Search engines generally do not use it as a ranking signal, but it is your chance to spark searchers’ interest.

Its main job is to attract readers and advertise your content, so make your meta description simple, legible, catchy, and interesting to get the most out of search marketing. Including keywords can also raise click-through, because search engines bold the searched terms in the description.

Search engines generally cut meta descriptions longer than 160 characters, so try to stay within this limit. The tag itself can be longer, but anything past the cutoff will usually not be shown in the snippet.
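To preview roughly how a long description might be cut, you can truncate it at the last whole word within the limit and add an ellipsis. This is only an approximation: real engines may measure display width rather than characters, and the `snippet_preview` helper and sample text are hypothetical.

```python
def snippet_preview(description: str, limit: int = 160) -> str:
    """Approximate a truncated snippet: cut at the last word boundary so that
    the text plus an ellipsis fits within `limit` characters."""
    text = " ".join(description.split())          # collapse runs of whitespace
    if len(text) <= limit:
        return text
    cut = text[: limit - 1].rsplit(" ", 1)[0]     # leave room for the ellipsis
    return cut.rstrip(" ,;:") + "…"


short = "Reputation management services that help you monitor and improve what people find about you online."
long = short + " " + short
print(snippet_preview(short) == short)  # True
print(len(snippet_preview(long)) <= 160, snippet_preview(long).endswith("…"))  # True True
```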

Where no meta description is available, search engines build the snippet from other text on the page, usually text that matches the searched terms. For pages that target several keywords and topics, this can be a perfectly valid approach.

Not as important meta tags

Meta Keywords: The meta keywords tag is not nearly as important as it once was. You can read why it has fallen into disuse here: Meta Keywords Tag 101.

Meta Refresh, Meta Revisit-after, Meta Content-type, and others:

Compared with the meta description tag, these tags are of little importance for SEO. You can read about the few uses they have in Google’s Search Console Help.

URL Structures

URLs, the addresses through which users locate your content, are also valuable from a search perspective. They appear in several important places.

Because search engines display URLs in the search results, URLs can affect click-through and visibility. URLs are also used in ranking documents, and pages whose URLs include the searched terms get some benefit from that descriptive use of keywords.

URLs also appear in the browser’s address bar. There, their structure and design have little effect on search engines, but a poorly designed URL can create a negative user experience, while a clear one makes for an enjoyable one.

URL Construction Guidelines

Be realistic

Put yourself in the user’s shoes and look at your URL. If you can easily and accurately predict the content of the page from it, the URL is doing its job. It doesn’t need to spell out every detail, but a URL that gives insight into the content makes it easier and quicker for users to decide to click, and can generate more click-throughs.

Make it brief

While a descriptive URL is important, keep it as short as it can be while still describing the page. Short URLs are easier to copy and paste into emails, blog posts, and so forth, and are shown in full in search results.

Use but don’t overuse Keywords

If your page targets a specific term or phrase, include it in the URL. But don’t overload the URL with keywords: overuse makes URLs less usable and can trip spam filters.

Ensure stability

URLs such as https://mycitysocial.com/?id=167 can be transformed into a more stable, human-readable version such as https://www.mycitysocial.com/reputation-management-services/ using URL-rewriting tools like mod_rewrite for Apache and ISAPI_rewrite for Microsoft IIS. We recommend designing your URLs to be as simple and human-readable as possible. This makes for a more enjoyable user experience, which can increase click-through rates.

Use hyphens to separate words

Some search engines and web applications cannot reliably interpret characters such as the plus sign (+), spaces (%20), and underscores (_) as word separators. Using the hyphen (-) instead, which all major search engines recognize as a word separator, gives users a better experience and avoids these problems, as in the reputation-management-services URL above.
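Here is a minimal sketch of turning a page title into a hyphen-separated URL slug. The `slugify` helper is hypothetical, and it assumes ASCII-only slugs are wanted: accented Latin letters are reduced to their base letters and any other non-ASCII characters are dropped, which would not suit sites in non-Latin scripts.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Decompose accented characters (é -> e + accent) and drop non-ASCII parts.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Replace each run of non-alphanumerics (spaces, +, _, punctuation) with one hyphen.
    text = re.sub(r"[^a-z0-9]+", "-", text.lower())
    return text.strip("-")


print(slugify("Reputation Management Services"))  # reputation-management-services
print(slugify("Café_menu + prices 2024"))          # cafe-menu-prices-2024
```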

Canonical and Duplicate Versions of Content

Duplicate content: Duplicate content is one of the most troublesome problems a website can face. In recent years, search engines have cracked down on pages with thin or duplicate content by assigning them lower rankings.

Canonicalization issues arise when two or more duplicate versions of a webpage appear on different URLs, which is very common with modern content management systems. For instance, a site may offer both a regular version of a page and a print-optimized version. Duplicate content can even appear across multiple websites. This poses a big problem for search engines: which version should they show users? You can read more about these problems here: duplicate content.

Search engines try to avoid showing multiple versions of the same content, preferring to give users a diverse set of URLs. As a result, when they find duplicated material, they must guess which version is the original (or the best) and rank the others lower.

Canonicalization is the practice of organizing your content so that every unique piece has ONE, and ONLY ONE, URL. If you leave multiple versions of your content online, search engines face the question: which diamond is the right one?

If the site owner took the three pages and redirected them to a single page, search engines would see only one strong page. Combined this way, the pages stop competing with one another and together create a stronger relevancy and popularity signal, which can raise that page’s ranking.
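A permanent (301) redirect is the usual way to fold duplicate URLs into one page. The sketch below is a minimal WSGI application using only the Python standard library; the duplicate paths in `REDIRECTS` and the `/blog/` page are hypothetical. On a real site the same mapping would more often live in the web server configuration.

```python
# Hypothetical duplicate URLs, all pointing at one canonical page.
REDIRECTS = {
    "/blog/index.html": "/blog/",
    "/blog/print/": "/blog/",
    "/Blog/": "/blog/",
}


def app(environ, start_response):
    """Minimal WSGI app: 301-redirect duplicates and serve the canonical page."""
    path = environ.get("PATH_INFO", "/")
    if path in REDIRECTS:
        start_response("301 Moved Permanently", [("Location", REDIRECTS[path])])
        return [b""]
    if path == "/blog/":
        start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
        return [b"<h1>Blog</h1>"]
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Not found"]
```

To try it locally, you could serve it with `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` and request http://localhost:8000/blog/print/.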


Canonical Tag to the rescue!

The canonical tag, also known as the canonical URL tag or rel="canonical", is another way to reduce duplicate content on a single site and consolidate it onto one URL. It also works across websites, from a URL on one domain to a URL on a different domain.

To use it, add a canonical tag to the page with the duplicate content, pointing to the master URL:

```html
<link rel="canonical" href="https://mycitysocial.com/blog"/>
```

This tells search engines to treat the current page as a copy of https://mycitysocial.com/blog, and to credit all of the link and content metrics they apply to that URL.


From an SEO perspective, the canonical tag works much like a 301 redirect: it tells search engines to treat multiple pages as one. Unlike a redirect, though, it does not send visitors from the duplicate pages to the master page.
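To check which URL a page declares as canonical, a parser only needs to find a link element whose rel includes "canonical". In this sketch, the `canonical_url` helper is hypothetical. It falls back to the page’s own URL when no canonical tag is present, and it resolves relative hrefs against the page URL.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Finds the href of the first <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        if "canonical" in (attributes.get("rel") or "").lower().split():
            self.canonical = attributes.get("href")


def canonical_url(html: str, page_url: str) -> str:
    """Return the declared canonical URL, or the page's own URL if none is declared."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return urljoin(page_url, finder.canonical) if finder.canonical else page_url


print_version = '<head><link rel="canonical" href="https://mycitysocial.com/blog"/></head>'
print(canonical_url(print_version, "https://mycitysocial.com/blog?print=1"))
# https://mycitysocial.com/blog
```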

You can read more about duplicate content in this post by Dr. Pete.

Rich Snippets

Have you ever wondered how some results show a five-star rating in the search results? Most of the time, the rating comes from rich snippets on the source page. Rich snippets use structured markup to give search engines detailed information about your content, so they can more easily match searches to it.

Rich Snippets are not required for your content to rank well, but as their use grows, sites that use them can sometimes have an advantage over those that don’t.

Structuring your data means labeling your information so that search engines can easily identify what type of content you present. Schema.org lists many types of data that benefit from this, such as products, reviews, businesses, recipes, and so forth.

Structured data stands out in search results, for example as star ratings on reviews, and can also supply author information. Rich snippets can give your SEO a boost; you can learn more at Schema.org and with Google’s Rich Snippet Testing Tool.

Rich Snippets in the Wild

If you announce an SEO conference on your blog, your HTML might look like this:

```html
<div>
  SEO Conference<br/>
  Learn about SEO from experts in the field.<br/>
  Event date:<br/>
  May 8, 7:30pm
</div>
```

By structuring your data with Rich Snippets, you can give search engines precise details that they can easily understand. The same content would look like this:

```html
<div itemscope itemtype="http://schema.org/Event">
  <div itemprop="name">SEO Conference</div>
  <span itemprop="description">Learn about SEO from experts in the field.</span>
  Event date:
  <time itemprop="startDate" datetime="2012-05-08T19:30">May 8, 7:30pm</time>
</div>
```
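To see what the structured version gives a machine, here is a minimal sketch that reads the itemprop values from the event markup. It assumes simple, non-nested microdata, and for a time element it takes the machine-readable datetime attribute instead of the human-readable text. The `read_microdata` helper is hypothetical; real structured-data parsers handle far more cases.

```python
from html.parser import HTMLParser


class MicrodataReader(HTMLParser):
    """Collects itemtype and itemprop values from simple, non-nested microdata."""

    def __init__(self):
        super().__init__()
        self.item_type = None
        self.props = {}
        self._open_prop = None   # (property name, tag) currently being read
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if "itemtype" in attributes:
            self.item_type = attributes["itemtype"]
        prop = attributes.get("itemprop")
        if prop is None:
            return
        if tag == "time" and "datetime" in attributes:
            self.props[prop] = attributes["datetime"]   # machine-readable value
        else:
            self._open_prop, self._buffer = (prop, tag), []

    def handle_data(self, data):
        if self._open_prop:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if self._open_prop and tag == self._open_prop[1]:
            self.props[self._open_prop[0]] = "".join(self._buffer).strip()
            self._open_prop = None


def read_microdata(html: str):
    reader = MicrodataReader()
    reader.feed(html)
    reader.close()
    return reader.item_type, reader.props


event_html = """
<div itemscope itemtype="http://schema.org/Event">
  <div itemprop="name">SEO Conference</div>
  <span itemprop="description">Learn about SEO from experts in the field.</span>
  Event date:
  <time itemprop="startDate" datetime="2012-05-08T19:30">May 8, 7:30pm</time>
</div>
"""
item_type, props = read_microdata(event_html)
print(item_type)  # http://schema.org/Event
print(props)
# {'name': 'SEO Conference', 'description': 'Learn about SEO from experts in the field.', 'startDate': '2012-05-08T19:30'}
```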

Defending Your Site's Honor

How scrapers steal your rankings

In today’s connected world, it is unfortunate that many websites depend on copying content from other domains and modifying it to suit their own purposes. Stealing others’ content and republishing it as your own is called scraping, and, disheartening as it is, scrapers sometimes rank higher in search than the original pages.

This is why, whenever you publish content in a feed format such as RSS or XML, you should ping the major blogging and tracking services, such as Google and Yahoo!. A service like Pingomatic can automate the process. Much publishing software pings these services automatically; if yours doesn’t, enable the option or have your developers add auto-pinging on publish.
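Pinging services such as Pingomatic commonly accept the XML-RPC `weblogUpdates.ping` call, which takes your site’s name and URL. The sketch below uses Python’s standard `xmlrpc.client`; the endpoint URL and blog name are assumptions to check against the service’s own documentation. Only the request payload is built when the script runs, and `send_ping` is never called, so nothing is sent over the network.

```python
import xmlrpc.client

# Assumed Pingomatic XML-RPC endpoint; confirm against the service's documentation.
PING_ENDPOINT = "http://rpc.pingomatic.com/"


def build_ping_payload(blog_name: str, blog_url: str) -> str:
    """Serialize a weblogUpdates.ping request without sending it."""
    return xmlrpc.client.dumps((blog_name, blog_url), methodname="weblogUpdates.ping")


def send_ping(blog_name: str, blog_url: str, endpoint: str = PING_ENDPOINT):
    """Send the ping; call this from your publish step (requires network access)."""
    proxy = xmlrpc.client.ServerProxy(endpoint)
    return proxy.weblogUpdates.ping(blog_name, blog_url)


print(build_ping_payload("MyCity Social Blog", "https://mycitysocial.com/blog"))
```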

This can protect your content to a great extent, and you can strengthen your defenses by taking advantage of scrapers’ laziness. Scrapers rarely edit what they copy, so if your content includes links to other pages on your site, the scraped copies will send their visitors back to your site and your original content without the scraper noticing. For this to work, the links must be absolute rather than relative, because a relative link resolves against the scraper’s domain instead of yours. So, instead of `<a href="../">Home</a>`, use `<a href="https://mycitysocial.com">Home</a>`.
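The difference between relative and absolute links can be demonstrated with Python’s standard `urllib.parse.urljoin`, which resolves a link against the page it appears on, the way a browser does. The scraper’s address below is hypothetical.

```python
from urllib.parse import urljoin

# Hypothetical address where a scraper republished one of your posts.
scraped_copy = "https://scraper.example/copied/post.html"

relative_home = "../"
absolute_home = "https://mycitysocial.com"

# Links are resolved against the page they appear on.
print(urljoin(scraped_copy, relative_home))  # https://scraper.example/
print(urljoin(scraped_copy, absolute_home))  # https://mycitysocial.com
```

On the scraped copy, the relative link points readers back to the scraper’s own site, while the absolute link still leads to yours.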

The more publicity and click-through your page gets, the more likely it is to be scraped, duplicated, and republished. You can try the anti-scraping strategies suggested here, among others, but note that no strategy completely protects content at the moment. Sometimes it is best to ignore republishing and scraping unless it starts to threaten your network, your traffic, and, of course, your ranking. You can find some helpful tips on this issue here: 5 Easy to Use Tools to Effectively Find and Remove Stolen Content.