CHAPTER 8 | SEO Tools & Protocols

Increasing Popularity and Links

Many SEO tools are available, and some of the most useful are provided by the search engines themselves. Search engines want webmasters to build sites and content that are accessible, so they provide a variety of tools, guidance, and analytics. These resources let webmasters and search engines exchange information.

Several common protocols are supported by the major search engines. Below, we explain what they are and why they are useful.

Common Search Engine Protocols

1. Sitemaps

Sitemaps are files that give search engines hints about how to crawl a website. They help search engines find and classify content that they might not have discovered on their own. Sitemaps come in a variety of formats and can highlight different types of content, including images, mobile, news, and video.

The full details of the protocol are available at Sitemaps.org, and you can build your own sitemaps at XML-Sitemaps.com. Sitemaps come in three formats:

XML

Extensible Markup Language (recommended format)

- Advantage: XML is the best-suited format for sitemaps. Search engines find it very easy to parse, and a wide variety of sitemap generators can produce it. XML also allows the most granular control over page parameters.

- Disadvantage: XML files are relatively large. Because every element needs an opening tag and a closing tag, file sizes grow quickly.

RSS

Really Simple Syndication or Rich Site Summary

- Advantage: RSS sitemaps are easy to maintain and can be set up to update automatically when new content is added.

- Disadvantage: Although RSS is a dialect of XML, it is harder to manage because of its updating properties.

Txt

Text File

- Advantage: Text files are very simple. The layout of a text sitemap is one URL per line, up to 50,000 lines.

- Disadvantage: A text sitemap cannot carry metadata about pages.
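
To make the difference between the formats concrete, here is a minimal Python sketch that writes the same two pages as an XML sitemap and as a text sitemap. The page URLs and the lastmod date are made up for illustration, and the helper names (build_xml_sitemap, build_text_sitemap) are hypothetical. Only the loc and lastmod elements from the Sitemaps.org protocol are shown.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the Sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_xml_sitemap(pages):
    """Return an XML sitemap string for a list of page dicts."""
    # The namespace is written as a plain xmlns attribute on the root element.
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for page in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page["loc"]
        # lastmod is optional metadata that the text format cannot express.
        if page.get("lastmod"):
            ET.SubElement(url, "lastmod").text = page["lastmod"]
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


def build_text_sitemap(pages):
    """Return a text sitemap: one URL per line, no metadata."""
    return "\n".join(page["loc"] for page in pages) + "\n"


# Hypothetical pages used only for this example.
pages = [
    {"loc": "http://www.example.com/", "lastmod": "2024-01-15"},
    {"loc": "http://www.example.com/about"},
]

xml_sitemap = build_xml_sitemap(pages)
text_sitemap = build_text_sitemap(pages)
print(xml_sitemap)
print(text_sitemap)
```

The XML output wraps every URL in url and loc tags, which is why the files grow large, while the text output is only the list of URLs.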

2. Robots.txt

The robots.txt file, a product of the Robots Exclusion Protocol, is a file kept in a website’s root directory (e.g., www.google.com/robots.txt). It gives instructions to the automated web crawlers that visit the site, including search engine crawlers.

Using robots.txt, webmasters can tell search engines which parts of a site bots should not crawl, where the sitemap files are located, and what crawl-delay to observe. For more details, see the robots.txt Knowledge Center page.

The following commands are available:

Disallow

This stops cooperative robots from crawling some pages and folders.

Sitemap

This shows where a website’s sitemap or sitemaps are located.

Crawl-delay

This indicates the speed, in seconds, at which a robot may crawl a server.

An Example of Robots.txt

# Robots.txt www.example.com/robots.txt

User-agent: *

Disallow:

# Don't allow spambot to crawl any pages

User-agent: spambot

Disallow: /

Sitemap: http://www.example.com/sitemap.xml
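
One way to see how a cooperative robot would read these rules is Python’s standard urllib.robotparser module. The sketch below is illustrative only: it parses a copy of the example file from a string, the user agent name examplebot is hypothetical, and the Crawl-delay line is added here as an assumption so that all three commands appear. The site_maps() call needs Python 3.8 or later. The lines of each group are kept together because this parser treats a blank line as the end of a group.

```python
from urllib.robotparser import RobotFileParser

# Copy of the example file, plus an assumed Crawl-delay line for illustration.
ROBOTS_TXT = """\
# robots.txt for www.example.com
User-agent: *
Disallow:
Crawl-delay: 10

# Don't allow spambot to crawl any pages
User-agent: spambot
Disallow: /

Sitemap: http://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

page = "http://www.example.com/products/index.html"

# An empty Disallow means nothing is blocked for ordinary robots.
print(parser.can_fetch("examplebot", page))

# "Disallow: /" blocks the whole site for the robot named spambot.
print(parser.can_fetch("spambot", page))

# Crawl delay (in seconds) that applies to ordinary robots.
print(parser.crawl_delay("examplebot"))

# Sitemap locations listed in the file.
print(parser.site_maps())
```

This only models a robot that chooses to obey the file; nothing in robots.txt can force a robot to comply.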

Warning: Webmasters should know that not all web robots obey robots.txt. People with questionable aims, such as scraping e-mail addresses or hacking websites, often build robots that ignore this protocol. For this reason, the robots.txt file should not list the location of private sections of an otherwise publicly accessible website. Such pages can instead use the meta robots tag to keep the major search engines from indexing them.

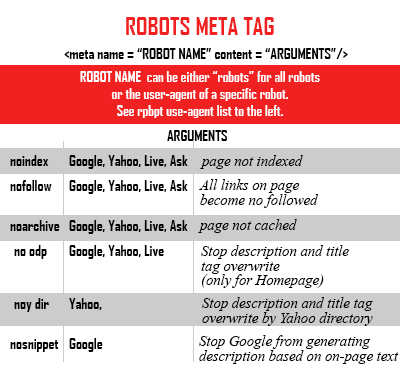

3. Meta Robots

The meta robots tag gives search engine bots page-level instructions.

The meta robots tag should be placed in the head section of the HTML document.

An Example of Meta Robots

<html>

<head>

<title>The Best Webpage on the Internet</title>

<meta name="ROBOTS" content="NOINDEX, NOFOLLOW">

</head>

<body>

<h1>Hello World</h1>

</body>

</html>

In the example above, “NOINDEX, NOFOLLOW” tells robots not to include the page in their index and not to follow the links on the page.
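
As a rough illustration of how a crawler might read this tag, the following Python sketch uses the standard html.parser module to collect the directives from a page. The class name MetaRobotsParser is hypothetical, and real search engines apply their own, more involved logic.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collect the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        # The name value is compared case-insensitively ("ROBOTS" or "robots").
        if (attributes.get("name") or "").lower() != "robots":
            return
        content = attributes.get("content") or ""
        for part in content.split(","):
            if part.strip():
                self.directives.add(part.strip().lower())


page = """
<html>
<head>
<title>The Best Webpage on the Internet</title>
<meta name="ROBOTS" content="NOINDEX, NOFOLLOW">
</head>
<body>
<h1>Hello World</h1>
</body>
</html>
"""

parser = MetaRobotsParser()
parser.feed(page)

may_index = "noindex" not in parser.directives
may_follow = "nofollow" not in parser.directives
print(sorted(parser.directives), may_index, may_follow)
```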

4. Rel=”Nofollow”

Remember how links act as votes? The rel=nofollow attribute lets you link to a resource while withholding your “vote” for search engine purposes. Literally, “nofollow” tells search engines not to follow the link, although some still do. In any case, these links pass less value than followed links. They are nonetheless useful in situations where you link to an untrusted source.

Below is an example of nofollow:

<a href="http://www.example.com" title="Example" rel="nofollow">Example Link</a>

In the example above, because rel=nofollow is included, the value of the link is not passed to example.com.
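
The distinction can be sketched in Python with the standard html.parser module: the class below (LinkCollector, a hypothetical name) sorts the links on a page into followed and nofollowed lists. The second link and its URL are invented for the example.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Sort the links on a page into followed and nofollowed lists."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollowed = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # rel can hold several space-separated tokens, e.g. "nofollow noopener".
        rel_tokens = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollowed.append(href)
        else:
            self.followed.append(href)


html = """
<a href="http://www.example.com" title="Example" rel="nofollow">Example Link</a>
<a href="http://www.example.com/about">About</a>
"""

collector = LinkCollector()
collector.feed(html)
print(collector.followed)
print(collector.nofollowed)
```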

5. Rel=”canonical”

More often than not, two or more copies of the same content appear on your website under different URLs. For instance, the URLs below can all refer to a single homepage:

- http://www.example.com/

- http://www.example.com/default.asp

- http://example.com/

- http://example.com/default.asp

- http://Example.com/Default.asp

Search engines view these as five different pages. Because the content on each page is identical, search engines may devalue the content and its rankings.

The canonical tag resolves this issue by telling search robots which page is the single, authoritative version that should be used in web results.

An Example of rel=”canonical” for the URL http://example.com/default.asp

<html>

<head>

<title>The Best Webpage on the Internet</title>

<link rel="canonical" href="http://www.example.com">

</head>

<body>

<h1>Hello World</h1>

</body>

</html>

In the example above, rel=canonical tells robots that this page is a copy of http://www.example.com and that the latter URL should be treated as the canonical, authoritative one.
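
To show why the five URLs count as different pages, here is a small Python sketch that compares them as strings and then maps each one to a single canonical form. The rules it applies are assumptions about this particular example site, not general truths: the www host is preferred, default.asp is the default document, and paths are treated as case-insensitive.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumptions for this example site only.
CANONICAL_HOST = "www.example.com"
DEFAULT_DOCUMENTS = {"default.asp"}


def canonicalize(url):
    """Map a homepage URL variant to one canonical URL (illustrative rules)."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host == "example.com":
        host = CANONICAL_HOST
    path = parts.path or "/"
    # Drop a trailing default document such as /default.asp.
    head, _, last = path.rpartition("/")
    if last.lower() in DEFAULT_DOCUMENTS:
        path = head + "/"
    return urlunsplit((parts.scheme.lower(), host, path, parts.query, ""))


urls = [
    "http://www.example.com/",
    "http://www.example.com/default.asp",
    "http://example.com/",
    "http://example.com/default.asp",
    "http://Example.com/Default.asp",
]

distinct_before = len(set(urls))
canonical_urls = {canonicalize(u) for u in urls}
print(distinct_before, canonical_urls)
```

Compared as plain strings, the list holds five distinct URLs; after normalization they collapse to http://www.example.com/ (with a trailing slash, which this sketch keeps for the root path). The canonical tag communicates the same decision to search engines without changing the URLs themselves.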

SEO Tools

Google Search Console

Google Search Console is widely used across organizations for SEO analysis and is one of the best SEO tools available today.

Key Features

Geographic Target – If a site targets users in a particular location, webmasters can give Google information that helps determine how the site appears in that country’s search results. This also improves search results for geographic queries.

Preferred Domain – The preferred domain is the one a webmaster would like used to index the site’s pages. For example, if a webmaster specifies http://www.example.com as the preferred domain and Google finds a link to the site formatted as http://example.com, Google will treat that link as pointing to http://www.example.com.

URL Parameters – You can tell Google about each parameter used on your site, such as “sort=price” and “sessionid=2”. This helps Google crawl your site more efficiently.

Crawl Rate – This affects the speed (but not the frequency) of Googlebot’s requests during crawling.

Malware – Google informs site owners whenever it finds malware on their site. Malware negatively affects the user experience and hurts the site’s rankings.

Crawl Errors – Googlebot reports significant errors it encounters while crawling, such as 404s.

HTML Suggestions – Google identifies search engine-unfriendly HTML elements such as issues with meta descriptions and title tags.
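
The URL Parameters feature is easier to picture with a small sketch. The Python function below (strip_ignored_params, a hypothetical helper) removes parameters that are assumed not to change what a page contains, so that several crawlable URLs collapse into one. Which parameters are safe to ignore is an assumption made for this example; on a real site only the webmaster knows.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: on this example site, these parameters never change page content.
IGNORED_PARAMS = {"sessionid", "sort"}


def strip_ignored_params(url):
    """Return the URL without the parameters listed in IGNORED_PARAMS."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment)
    )


# Hypothetical URLs that all show the same product listing.
variants = [
    "http://www.example.com/shoes?sort=price&sessionid=2",
    "http://www.example.com/shoes?sessionid=7",
    "http://www.example.com/shoes",
]

unique_pages = {strip_ignored_params(u) for u in variants}
print(unique_pages)
```

A crawler that knows these parameters can be ignored needs to fetch one URL instead of three.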

Your Site on the Web

Search Console makes a number of statistics available that help with understanding a site’s SEO performance. They include top pages, keyword impressions, and click-through rates.
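
Click-through rate is simply clicks divided by impressions. The short Python sketch below shows the arithmetic with made-up numbers; the function name is hypothetical and the figures are not taken from any real report.

```python
def click_through_rate(clicks, impressions):
    """Return clicks divided by impressions, or 0.0 when there were no impressions."""
    if impressions == 0:
        return 0.0
    return clicks / impressions


# Hypothetical numbers for one keyword: shown 1,000 times, clicked 50 times.
ctr = click_through_rate(clicks=50, impressions=1000)
print(f"{ctr:.1%}")  # prints 5.0%
```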

Site Configuration

This section is useful for a number of tasks, such as testing robots.txt files, submitting sitemaps, requesting a change of address, and adjusting sitelinks. It also contains the Settings and URL Parameters sections.

+1 Metrics

When content from your site is shared on Google+ with the +1 button, it is often annotated in search results. Watch this video on Google+ to understand why this matters. In this section, Google Search Console shows site owners the effect of +1 sharing on their site’s performance in search results.

Labs

The Labs section of Search Console contains reports that Google considers experimental but that are nonetheless useful to webmasters. One of the most important is Site Performance, which shows how quickly a site loads for users.

Bing Webmaster Tools

Key Features

Sites Overview – This gives a summary of how a site performs in Bing-powered search results. Impressions, clicks, pages indexed, and the number of pages crawled are all available at a glance.

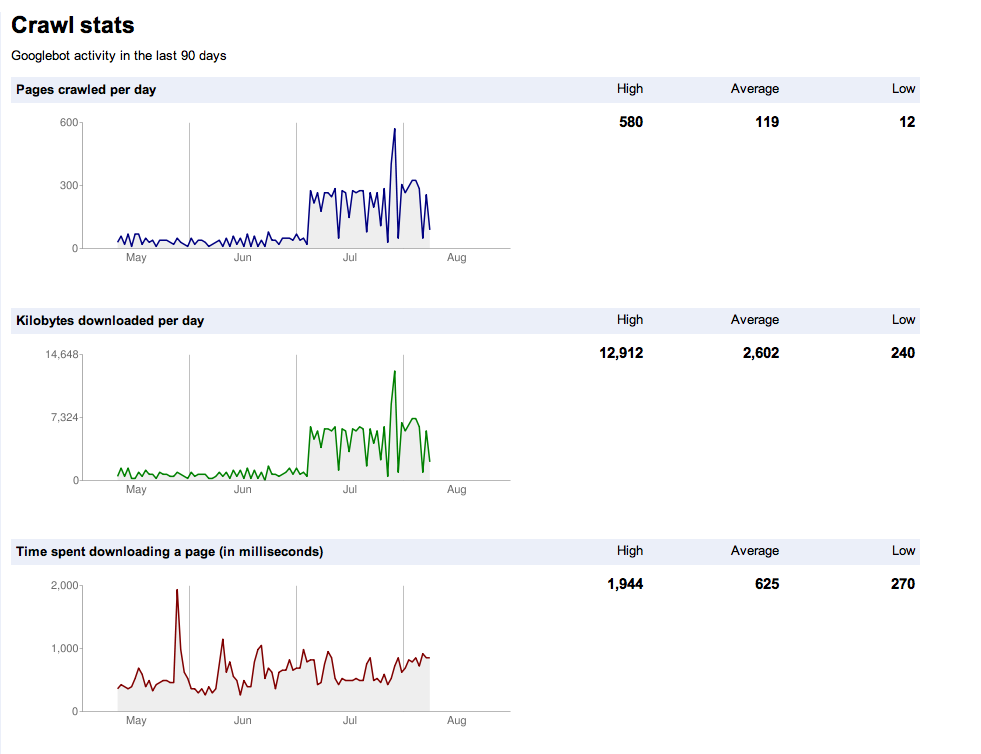

Crawl Stats – This reports how many pages on your site Bing has crawled and what errors it encountered. You can also submit sitemaps here to help Bing discover your content.

Index – This section lets webmasters see and control how Bing indexes their web pages. As in Google Search Console, site owners can examine how their content is organized within Bing, submit URLs, remove URLs from search results, explore links, and adjust parameter settings.

Traffic – The traffic summary in Bing Webmaster Tools reports impressions and click-through data by combining data from both Bing and Yahoo search results. The reports also show average position and cost estimates for ads targeting each keyword.

Only recently have search engines begun providing better tools that help webmasters improve their search results. This is a big step forward for SEO and for the relationship between webmasters and search engines. That said, there is a limit to how much the engines can help webmasters. The most important responsibilities for SEO still rest with webmasters and marketers.

This is why it is so important to learn SEO for yourself.